Data flows. Even when data comes to rest, gets sent to backup and possibly ends up in difficult-to-retrieve long-term storage, it generally flows from one place to another during its lifetime.

When data is in motion, it typically moves between applications and their dependent services. But data also moves between applications and operating systems, between application components, containers and microservices and, in the always-on era of cloud and the web, between application programming interfaces.

Today we know that data flows to such an extent that we now talk about data streaming, but what is it and how do we harness this computing principle?

What is data streaming?

A key information paradigm for modern IT stacks, data streaming describes the movement of data through and between the channels described above in a time-ordered sequence. It is a close cousin to the concept of computing events, where a log entry is created asynchronously for every keyboard stroke, mouse click or IoT sensor reading, and it applies to areas of system activity where data flows are generally rich and large.
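To make the idea of a time-ordered sequence concrete, here is a minimal Python sketch that merges events from several sources into one stream ordered by timestamp. The `Event` class, the `merge_streams` helper and the sample readings are hypothetical illustrations and are not part of Kafka or any Confluent product.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    timestamp: float  # seconds since an arbitrary start (assumed unit)
    source: str       # where the event came from
    payload: str      # what happened


def merge_streams(*streams):
    """Lazily merge streams that are each already sorted by timestamp
    into a single time-ordered stream."""
    return heapq.merge(*streams, key=lambda event: event.timestamp)


# Hypothetical sample data: three sources, each ordered by time.
keyboard = [Event(0.10, "keyboard", "key:A"), Event(0.45, "keyboard", "key:B")]
mouse = [Event(0.20, "mouse", "click")]
sensor = [Event(0.05, "sensor", "temp=21.5"), Event(0.30, "sensor", "temp=21.6")]

ordered = list(merge_streams(keyboard, mouse, sensor))
for event in ordered:
    print(f"{event.timestamp:.2f} {event.source:<8} {event.payload}")
```

Because `heapq.merge` is lazy, the same helper would also work on generators that never end, which is closer to how a real stream behaves than the fixed lists used here.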

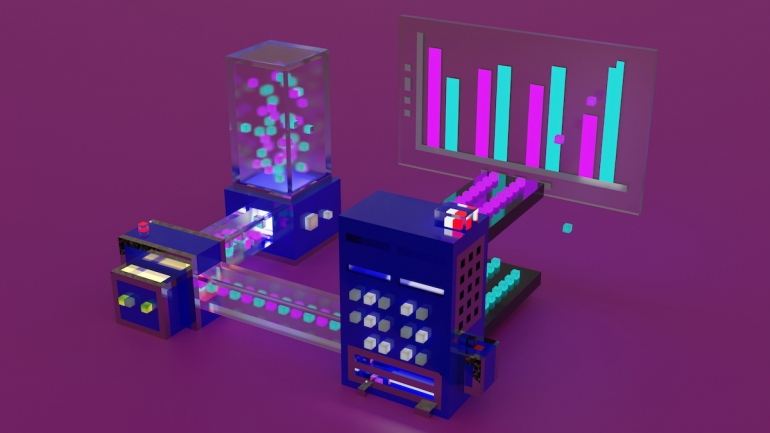

Confluent is a data streaming platform with a self-proclaimed mission to set data in motion. As complex as data streaming engineering sounds, the team has now created Confluent Stream Designer, a visual interface that is said to enable software developers to build streaming data pipelines in minutes.

Designing data streams

Confluent offers a simple point-and-click user interface, but it’s not necessarily one for you and your favorite auntie or uncle. It is aimed at software developers, and the hope is that it will make data streams accessible beyond specialized Apache Kafka experts.

Apache Kafka is an open source distributed event streaming platform created by Confluent co-founder and CEO Jay Kreps and his colleagues Neha Narkhede and Jun Rao while the trio worked at LinkedIn. Confluent offers a cloud-native foundational platform for real-time data streaming from multiple sources. It is designed to be the “intelligent connective tissue” for software-driven backend operations that deliver rich front-end user functions.

The theory behind Confluent Stream Designer is that with more teams able to rapidly build and iterate streaming pipelines, organizations can quickly connect more data throughout their business for more agile development alongside better and faster in-the-moment decision-making.

At the Current 2022: The Next Generation of Kafka Summit in Texas, there was the opportunity to speak directly with Confluent about its views and ambitions.

“We are in the middle of a major technological shift, where data streaming is making real time the new normal, enabling new business models, better customer experiences and more efficient operations,” said Kreps. “With Stream Designer we want to democratize this movement towards data streaming and make real time the default for all data flow in an organization.”

Streaming moves in from the edge

Kreps and the team further state that streaming technologies that were once at the edges have become the core of critical business functions.

Because traditional batch processing can no longer keep pace with the growing number of use cases that depend on millisecond updates, Confluent says that more organizations are pivoting to streaming, as their livelihood is defined by the ability to deliver data instantaneously across customer experiences and business operations.
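The difference between batch and streaming can be sketched in a few lines of Python. This is an illustration under simple assumptions, not a Kafka or Confluent API: `batch_total` and `streaming_totals` are hypothetical names, and the order amounts are made up. The batch function produces one answer after all the data has been collected, while the streaming function yields an updated answer as each record arrives.

```python
from typing import Iterable, Iterator


def batch_total(orders: list[float]) -> float:
    """Batch style: wait until every order is collected, then compute once."""
    return sum(orders)


def streaming_totals(orders: Iterable[float]) -> Iterator[float]:
    """Streaming style: emit an up-to-date total after each order arrives."""
    total = 0.0
    for amount in orders:
        total += amount
        yield total


# Hypothetical order amounts.
orders = [10.0, 5.0, 2.5]

print(batch_total(orders))             # one result, available only at the end
print(list(streaming_totals(orders)))  # one result per incoming order
```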

As something of a de facto standard for data streaming today, Kafka is said to enable some 80% of Fortune 100 companies to handle large volumes and varieties of data in real time.

But building streaming data pipelines on open source Kafka requires large teams of highly specialized engineering talent and time-consuming work across multiple tools. This puts pervasive data streaming out of reach for many organizations and leaves data pipelines clogged with stale data.

Analyst house IDC has said that businesses need to add more streaming use cases, but the lack of developer talent and increasing technical debt stand in the way.

“In terms of developers, data scientists and all other software engineers working with data streaming technologies, this is quite a new idea for many of them,” explained Kris Jenkins, developer advocate at Confluent. “This is significant progression onwards from use of a technology like a relational database.”

This all paves the way to a point where firms are able to create a so-called data mesh: A state of operations where every department in a business is able to share its data via the central IT function to aid higher-level decision-making at the corporate operational level. In this meshed fabric, other departments are also able to access those real-time data streams, subject to defined policy access controls, without needing involvement from the data originators.

What does Confluent offer developers?

In terms of product specifics, Confluent’s Stream Designer provides developers with what its makers call a “flexible point-and-click canvas” to build streaming data pipelines in minutes. It does this by letting developers describe data flows and business logic within the GUI.

It takes a developer-centric approach where users with different skills and needs can switch between the UI, a code editor and command-line interface to declaratively build data flow logic. It brings developer-oriented practices to pipelines, making it easier for developers new to Kafka to turn data into business value faster.
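As a rough illustration of what building data flow logic declaratively can mean, the Python sketch below describes a pipeline as plain data and lets a small interpreter turn that description into a chain of lazy stream operations. This is not Stream Designer’s actual format or API. The `run_pipeline` function, the `op` and `fn` keys and the sample orders are all hypothetical.

```python
from typing import Any, Iterable, Iterator

Record = dict[str, Any]


def run_pipeline(spec: list[dict[str, Any]], records: Iterable[Record]) -> Iterator[Record]:
    """Build a lazy processing chain from a declarative list of steps."""
    stream: Iterator[Record] = iter(records)
    for step in spec:
        if step["op"] == "filter":
            stream = filter(step["fn"], stream)
        elif step["op"] == "map":
            stream = map(step["fn"], stream)
        else:
            raise ValueError(f"unknown op: {step['op']}")
    return stream


# The pipeline is described as data: what should happen, not how to loop.
spec = [
    {"op": "filter", "fn": lambda r: r["amount"] >= 100},
    {"op": "map", "fn": lambda r: {**r, "tier": "large"}},
]

# Hypothetical input records.
orders = [{"id": 1, "amount": 250}, {"id": 2, "amount": 40}, {"id": 3, "amount": 100}]

result = list(run_pipeline(spec, orders))
print(result)
```

Keeping the description separate from the execution is what would allow the same pipeline to be edited from a canvas, a code editor or a command line, since each of those only needs to produce the description.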

With Stream Designer software, teams can avoid spending extended periods managing individual components on open source Kafka. Through one visual interface, developers can build pipelines with the complete Kafka ecosystem and then iterate and test before deployment into production in a modular fashion. There’s no longer a need to work across multiple, discrete components, like Kafka Streams and Kafka Connect, that require their own boilerplate code each time.

After building a pipeline, the next challenge is maintaining and updating it over its lifecycle as business requirements change and the tech stack evolves. Stream Designer provides a unified, end-to-end view to make it easy to observe, edit and manage pipelines to keep them up to date.

CEO Kreps’ market stance

Taking stock of the current state of what is clearly a still-nascent technology in the ascendancy, how does Kreps feel about his company’s relationship with other enterprise technology vendors?

“Well you know, this is a pretty significant shift in terms of how we all think about data and how we work with data — and, in real terms, it’s actually impacting all the technologies around it,” said Kreps. “Some of the operational databases vendors are already providing pretty deep integration to us — and us to them. That’s great for us, as our goal is to enable that connection and make it easy to work with Confluent across all their different systems.”

Will these same enterprise technology vendors now start to create more of their own data streaming solutions and start to come to market with their own approach? And if they do, would Kreps count that in some ways as a compliment to Confluent?

He agrees that there will inevitably be some attempt at replicating functionality. Overall though, he points to “a mindset shift among practitioners” in terms of what they expect and demand out of any new product, so he clearly hopes his firm’s dedicated focus on this space will win through.